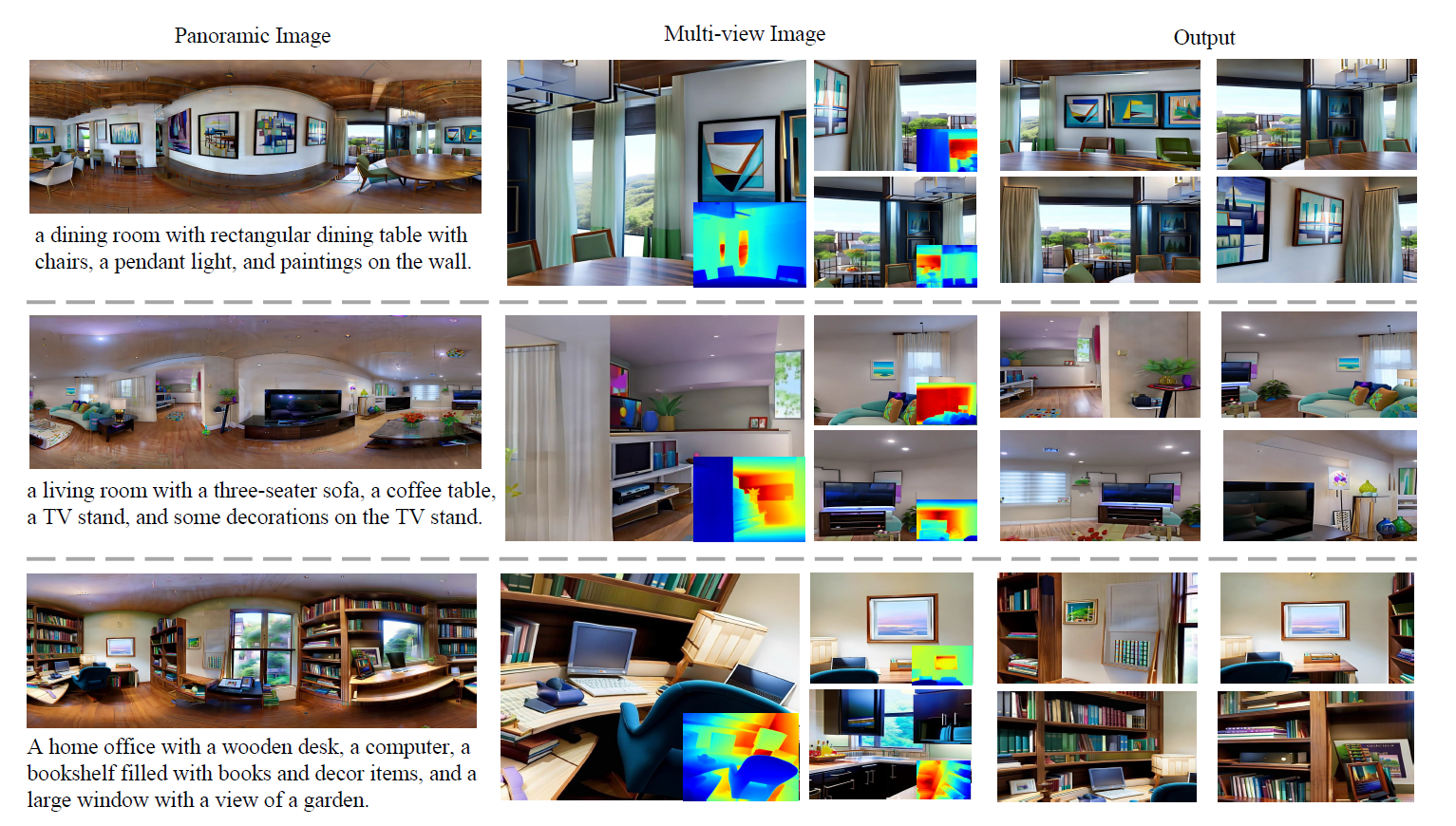

a dining room with a rectangular dining table with chairs, a pendant light, and paintings on the wall

a living room with a three-seater sofa, a coffee table, a TV stand, and some decorations on the TV stand

a kitchen with an island, bar stools, hanging cabinets, built-in oven and microwave, and some kitchen utensils on the countertop

A reading nook with a comfortable armchair, a small bookshelf, a floor lamp, and a side table with a cup of tea. Large windows with flowing curtains provide natural light.

A home office with a wooden desk, a computer, a bookshelf filled with books and decor items, and a large window with a view of a garden.

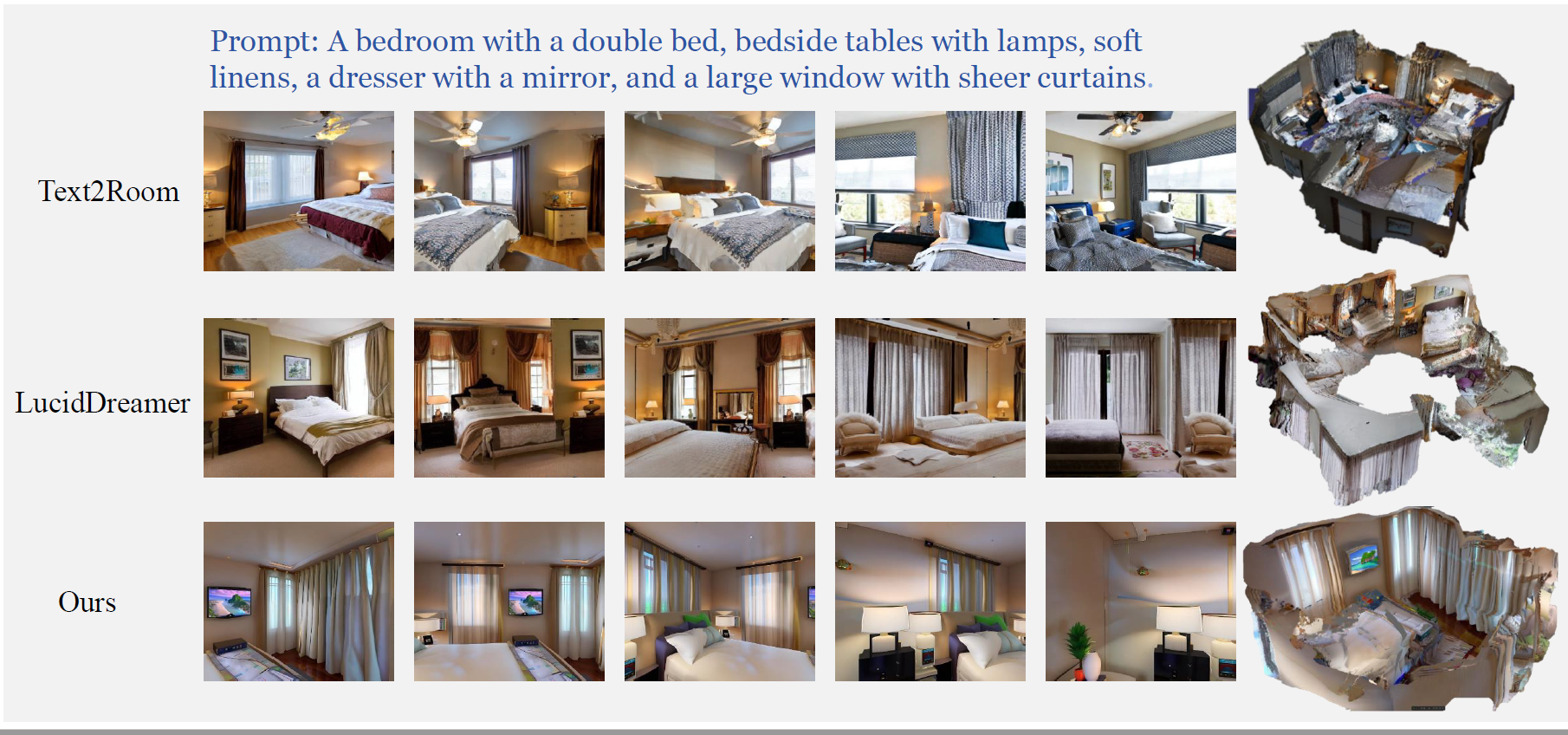

A bedroom with a double bed, bedside tables with lamps, soft linens, a dresser with a mirror, and a large window with sheer curtains.

A bathroom with a glass shower, a freestanding bathtub, a single sink with a mirror, white tiles, and a towel rack.

A dining area with a round table, four chairs, a pendant light, and a sideboard with decorative items and plants.

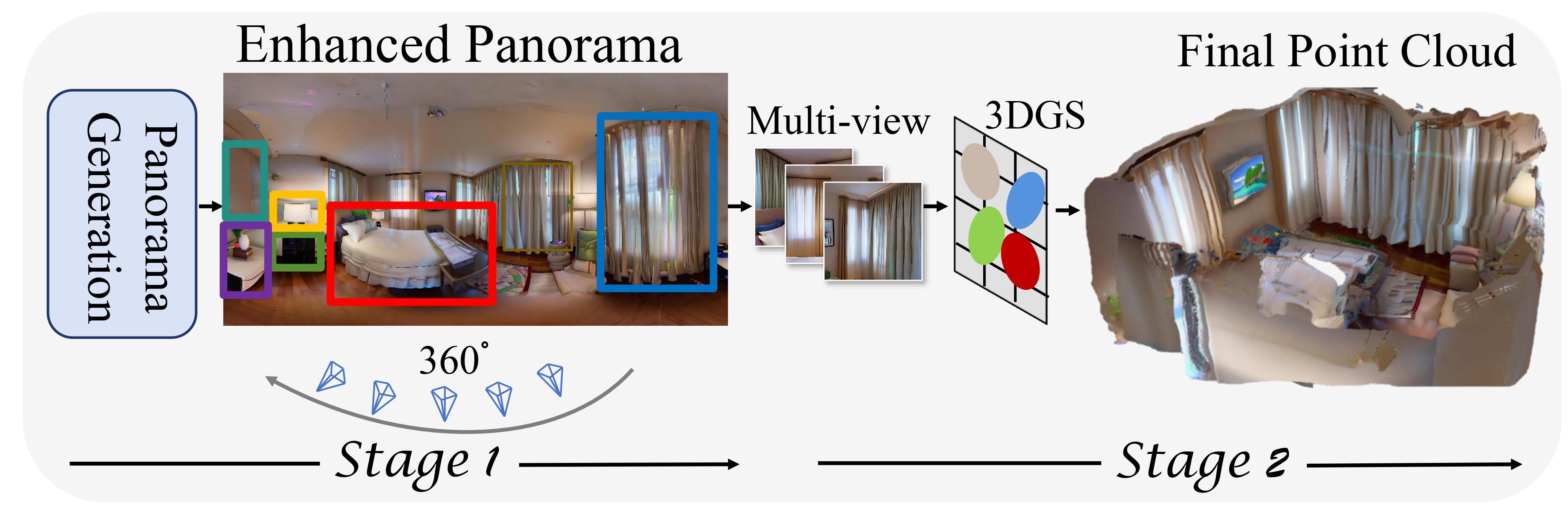

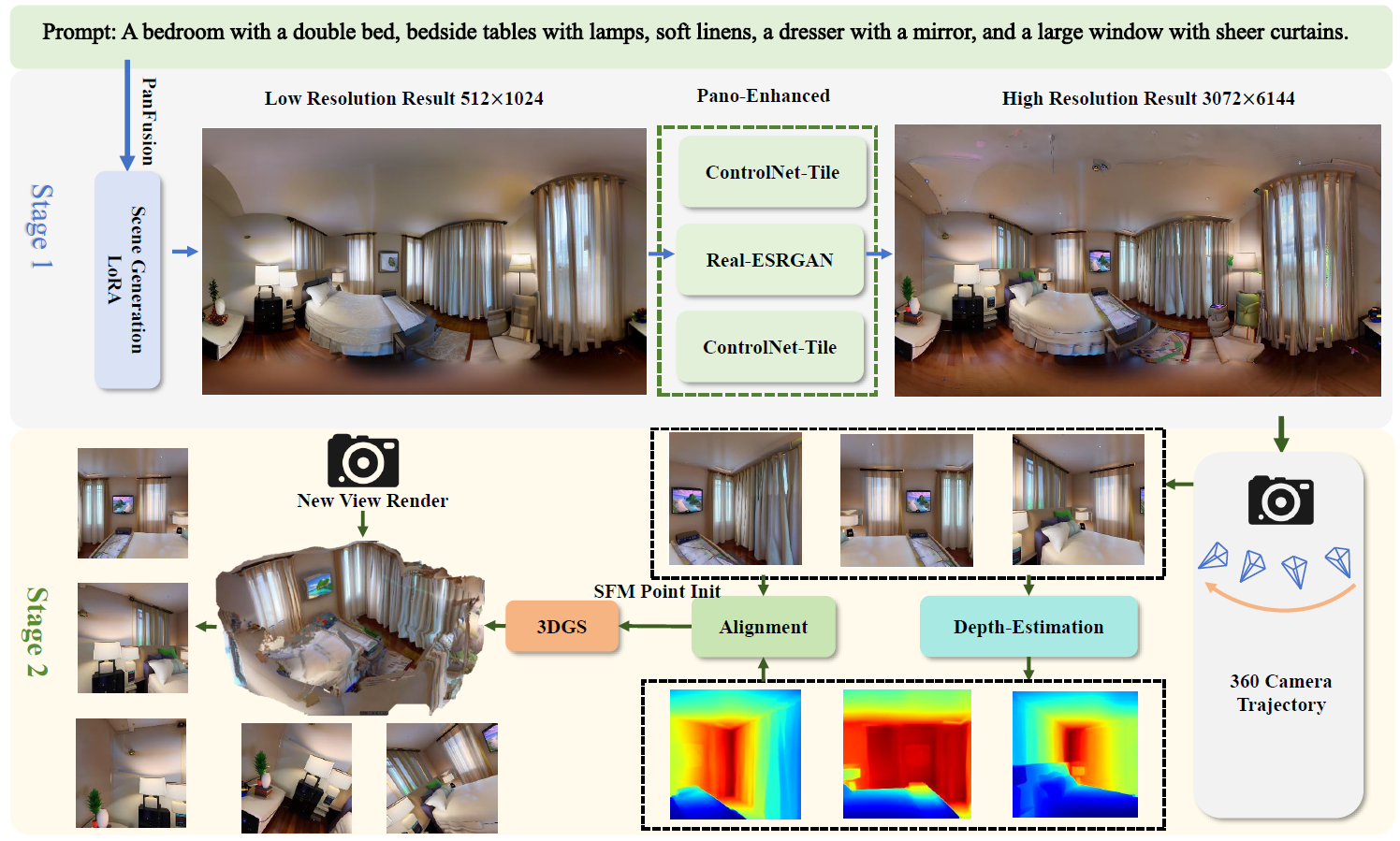

Text-driven 3D scene generation has seen significant advancements recently. However, most existing methods generate single-view images using generative models and then stitch them together in 3D space. Generating each view independently often results in spatial inconsistency and implausibility in the 3D scenes. To address this challenge, we propose a novel text-driven 3D-consistent scene generation model: SceneDreamer360. Our method leverages a text-driven panoramic image generation model as a prior for 3D scene generation and employs 3D Gaussian Splatting (3DGS) to ensure consistency across multi-view panoramic images. Specifically, SceneDreamer360 enhances the fine-tuned PanFusion generator with a three-stage panoramic enhancement, enabling the generation of high-resolution, detail-rich panoramic images. During 3D scene construction, a novel point cloud fusion initialization method is used, producing higher-quality and spatially consistent point clouds. Our extensive experiments demonstrate that, compared to other methods, SceneDreamer360 with its panoramic image generation and 3DGS can produce higher-quality, spatially consistent, and visually appealing 3D scenes from any text prompt.

The architecture of SceneDreamer360. SceneDreamer360 generates an initial panorama from any open-world textual description using the fine-tuned PanFusion model. This panorama is then enhanced to produce a high-resolution 3072 × 6144 image. In the second stage, multi-view algorithms generate multi-view images, and a monocular depth estimation model provides depth maps for initial point cloud fusion. Finally, 3D Gaussian Splatting is used to reconstruct and render the point cloud, resulting in a complete and consistent 3D scene.
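The step from depth maps to an initial point cloud can be illustrated with a minimal sketch. It is not the authors' implementation: it unprojects an equirectangular depth map directly instead of going through the perspective multi-view images, and it stands in a simple voxel-grid deduplication for the paper's point cloud fusion. The function names, the axis convention, and the `voxel_size` parameter are all assumptions made for illustration.

```python
import math


def pixel_to_ray(u, v, width, height):
    """Map an equirectangular pixel centre to a unit direction vector.

    Assumed convention (not taken from the paper): longitude runs from
    -pi to pi left to right, latitude from pi/2 to -pi/2 top to bottom,
    with +z forward and +y up.
    """
    lon = ((u + 0.5) / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - (v + 0.5) / height) * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


def unproject_panorama(depth, colors):
    """Turn a panoramic depth map into a list of (position, color) points.

    `depth` and `colors` are row-major nested lists of equal shape.
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    height = len(depth)
    width = len(depth[0])
    points = []
    for v in range(height):
        for u in range(width):
            d = depth[v][u]
            if d <= 0:
                continue
            x, y, z = pixel_to_ray(u, v, width, height)
            points.append(((x * d, y * d, z * d), colors[v][u]))
    return points


def fuse_point_clouds(clouds, voxel_size=0.05):
    """Merge several clouds, keeping the first point seen in each voxel.

    This is a hypothetical stand-in for point cloud fusion: it only
    removes near-duplicate points that land in the same grid cell.
    """
    fused = {}
    for cloud in clouds:
        for position, color in cloud:
            key = tuple(math.floor(c / voxel_size) for c in position)
            fused.setdefault(key, (position, color))
    return list(fused.values())
```

A fused list of this kind is the sort of input that could seed the positions and colors of the Gaussians before 3DGS optimization; the optimization itself is outside the scope of this sketch.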

We visualize the generated scenes using various prompts, as illustrated in the following figure. The method successfully generates 3D spatial scenes consistent with the text prompts. Additionally, part of the output demonstrates that images can be rendered from arbitrary trajectory views, showcasing the generalizability of our method.

We visually compared our method with LucidDreamer and Text2Room using identical spatial text prompts, as shown in the following figure. Our method outperforms the others by producing more consistent, complete, and detailed 3D spatial scene point clouds. The use of 3DGS enables superior detail and continuous object surfaces, surpassing Text2Room in clarity. Additionally, our Stage 1 panoramic optimization captures more spatial details, and our designed rendering trajectory generates more comprehensive scenes than LucidDreamer.

We would like to express our sincere gratitude to the authors of the following papers whose work has significantly contributed to our research:

PanFusion introduced a model capable of generating scene-consistent panoramic images, which acted as a prior for our scene generation.

3D Gaussian Splatting provided significant assistance for the realistic 3D scene reconstruction part of our work.

LucidDreamer and DreamScene360 offered many valuable lessons for our work.

@article{li2024scenedreamer360,
  title={SceneDreamer360: Text-Driven 3D-Consistent Scene Generation with Panoramic Gaussian Splatting},
  author={Li, Wenrui and Mi, Yapeng and Cai, Fucheng and Yang, Zhe and Zuo, Wangmeng and Wang, Xingtao and Fan, Xiaopeng},
  journal={arXiv preprint arXiv:2408.13711},
  year={2024},
}